Gene expression programming (GEP) is an evolutionary algorithm that creates computer programs or models. These computer programs are complex tree structures that learn and adapt by changing their sizes, shapes, and composition, much like a living organism. And like living organisms, the computer programs of GEP are also encoded in simple linear chromosomes of fixed length. Thus, GEP is a genotype-phenotype system, benefiting from a simple genome to keep and transmit the genetic information and a complex phenotype to explore the environment and adapt to it.

Background

Evolutionary algorithms use populations of individuals, select individuals according to fitness, and introduce genetic variation using one or more genetic operators. Their use in artificial computational systems dates back to the 1950s, when they were used to solve optimization problems (e.g. Box 1957[1] and Friedman 1959[2]). But it was with the introduction of evolution strategies by Rechenberg in 1965[3] that evolutionary algorithms gained popularity. A good overview text on evolutionary algorithms is the book “An Introduction to Genetic Algorithms” by Mitchell (1996).[4]

Gene expression programming[5] belongs to the family of evolutionary algorithms and is closely related to genetic algorithms and genetic programming. From genetic algorithms it inherited the linear chromosomes of fixed length; and from genetic programming it inherited the expressive parse trees of varied sizes and shapes.

In gene expression programming the linear chromosomes work as the genotype and the parse trees as the phenotype, creating a genotype/phenotype system. This genotype/phenotype system is multigenic, thus encoding multiple parse trees in each chromosome. This means that the computer programs created by GEP are composed of multiple parse trees. Because these parse trees are the result of gene expression, in GEP they are called expression trees.

Encoding: the genotype

The genome of gene expression programming consists of a linear, symbolic string or chromosome of fixed length composed of one or more genes of equal size. These genes, despite their fixed length, code for expression trees of different sizes and shapes. An example of a chromosome with two genes, each of size 9, is the string (position zero indicates the start of each gene):

012345678012345678
L+a-baccd**cLabacd

where “L” represents the natural logarithm function and “a”, “b”, “c”, and “d” represent the variables and constants used in a problem.

Expression trees: the phenotype

As shown above, the genes of gene expression programming all have the same size. However, these fixed-length strings code for expression trees of different sizes. This means that the size of the coding region varies from gene to gene, allowing adaptation and evolution to occur smoothly.

For example, the mathematical expression:

$$\sqrt{(a-b)(c+d)}$$

can also be represented as an expression tree:

where “Q” represents the square root function.

This kind of expression tree is the phenotypic expression of a GEP gene, whereas the gene itself is a linear string encoding this more complex structure. For this particular example, the linear string corresponds to:

01234567
Q*-+abcd

which is the straightforward reading of the expression tree from top to bottom, level by level, and from left to right within each level. These linear strings are called k-expressions (from Karva notation).
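The level-by-level reading rule can be sketched in Python. This is a minimal illustration, not a reference implementation: the nested-list tree representation and the helper names `decode`, `evaluate`, and `to_infix` are assumptions made here, with “Q” as the only one-argument function.

```python
import math

# Symbol -> arity; any symbol not listed is a terminal (arity 0).
ARITY = {"Q": 1, "+": 2, "-": 2, "*": 2, "/": 2}
OPS = {
    "Q": math.sqrt,
    "+": lambda x, y: x + y,
    "-": lambda x, y: x - y,
    "*": lambda x, y: x * y,
    "/": lambda x, y: x / y,
}


def decode(kexpr):
    """Turn a k-expression into a tree of nested lists [symbol, child, ...].

    Symbols are consumed level by level, left to right; each node takes as
    many of the following symbols as children as its arity demands.
    """
    root = [kexpr[0]]
    level, pos = [root], 1
    while level:
        next_level = []
        for node in level:
            for _ in range(ARITY.get(node[0], 0)):
                child = [kexpr[pos]]
                pos += 1
                node.append(child)
                next_level.append(child)
        level = next_level
    return root


def evaluate(node, env):
    """Execute the tree; env maps terminal symbols to numbers."""
    if node[0] in OPS:
        return OPS[node[0]](*(evaluate(child, env) for child in node[1:]))
    return env[node[0]]


def to_infix(node):
    """Render the tree as a fully parenthesized expression."""
    if node[0] == "Q":
        return f"sqrt({to_infix(node[1])})"
    if node[0] in OPS:
        return f"({to_infix(node[1])}{node[0]}{to_infix(node[2])})"
    return node[0]


tree = decode("Q*-+abcd")
print(to_infix(tree))                                     # sqrt(((a-b)*(c+d)))
print(evaluate(tree, {"a": 5, "b": 1, "c": 2, "d": 2}))   # 4.0
```

The same `decode` handles the longer k-expression of the next example: `to_infix(decode("Q*b**+baQba"))` gives `sqrt((b*((b*a)*(sqrt(a)+b))))`, which uses all 11 symbols.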

Going from k-expressions to expression trees is also very simple. For example, the following k-expression:

01234567890
Q*b**+baQba

is composed of two different terminals (the variables “a” and “b”), two different functions of two arguments (“*” and “+”), and a function of one argument (“Q”). Its expression gives:

K-expressions and genes

The k-expressions of gene expression programming correspond to the region of genes that gets expressed. This means that there might be sequences in the genes that are not expressed, which is indeed true for most genes. The reason for these noncoding regions is to provide a buffer of terminals so that all k-expressions encoded in GEP genes always correspond to valid programs or expressions.

The genes of gene expression programming are therefore composed of two different domains – a head and a tail – each with different properties and functions. The head is used mainly to encode the functions and variables chosen to solve the problem at hand, whereas the tail, while also used to encode the variables, provides essentially a reservoir of terminals to ensure that all programs are error-free.

For GEP genes the length of the tail t is given by the formula:

$$t = h\,(n_{\max} - 1) + 1$$

where h is the head’s length and nmax is the maximum arity. For example, for a gene created using the set of functions F = {Q, +, −, *, /} and the set of terminals T = {a, b}, nmax = 2. And if we choose a head length of 15, then t = 15 (2 − 1) + 1 = 16, which gives a gene length g of 15 + 16 = 31. The randomly generated string below is an example of one such gene:

0123456789012345678901234567890
*b+a-aQab+//+b+babbabbbababbaaa

It encodes the expression tree:

which, in this case, uses only 8 of the 31 elements that constitute the gene.

It’s not hard to see that, despite their fixed length, each gene has the potential to code for expression trees of different sizes and shapes, with the simplest composed of only one node (when the first element of a gene is a terminal) and the largest composed of as many nodes as there are elements in the gene (when all the elements in the head are functions with maximum arity).
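The head/tail rule and the size of the expressed region can be checked with a short Python sketch, assuming the function and terminal sets of the example above. `tail_length`, `random_gene`, and `coding_length` are illustrative names, not part of any GEP library.

```python
import random

# Function set F = {Q, +, -, *, /} with arities; terminal set T = {a, b}.
FUNCTIONS = {"Q": 1, "+": 2, "-": 2, "*": 2, "/": 2}
TERMINALS = ["a", "b"]


def tail_length(h, n_max):
    """t = h(n_max - 1) + 1."""
    return h * (n_max - 1) + 1


def random_gene(h, rng=random):
    """Random gene: head symbols drawn from F and T, tail symbols from T only."""
    n_max = max(FUNCTIONS.values())
    head = [rng.choice(list(FUNCTIONS) + TERMINALS) for _ in range(h)]
    tail = [rng.choice(TERMINALS) for _ in range(tail_length(h, n_max))]
    return "".join(head + tail)


def coding_length(gene):
    """Length of the expressed region (the k-expression) at the start of a gene."""
    open_slots, pos = 1, 0          # start with one open slot: the root
    while open_slots > 0:
        # Each symbol fills one slot and opens one new slot per argument.
        open_slots += FUNCTIONS.get(gene[pos], 0) - 1
        pos += 1
    return pos


print(tail_length(15, 2))                                  # 16
print(coding_length("*b+a-aQab+//+b+babbabbbababbaaa"))    # 8
```

Because the tail holds only terminals and has exactly t positions, `coding_length` never runs past the end of a gene produced by `random_gene`, whatever symbols the head happens to contain.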

It is also easy to see that all kinds of genetic modification (mutation, inversion, insertion, recombination, and so on) can be implemented with the guarantee that all resulting offspring encode correct, error-free programs, provided that each operator preserves the gene length and keeps only terminals in the tail.

Multigenic chromosomes

The chromosomes of gene expression programming are usually composed of more than one gene of equal length. Each gene codes for a sub-expression tree (sub-ET) or sub-program. Then the sub-ETs can interact with one another in different ways, forming a more complex program. The figure shows an example of a program composed of three sub-ETs.

Expression of GEP genes as sub-ETs. a) A three-genic chromosome with the tails shown in bold. b) The sub-ETs encoded by each gene.

In the final program the sub-ETs could be linked by addition or some other function, as there are no restrictions to the kind of linking function one might choose. Some examples of more complex linkers include taking the average, the median, the midrange, thresholding their sum to make a binomial classification, applying the sigmoid function to compute a probability, and so on. These linking functions are usually chosen a priori for each problem, but they can also be evolved elegantly and efficiently by the cellular system[6][7] of gene expression programming.
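Linking sub-ETs by addition, or by another function, can be sketched as follows. The sketch assumes the two-gene chromosome from the encoding section, with genes of size 9 (head 4, tail 5), and passes the linking function in as a parameter; `evaluate_gene` and `express` are hypothetical helper names.

```python
import math
import statistics

# "L" is the natural logarithm, as in the two-gene example chromosome.
ARITY = {"L": 1, "+": 2, "-": 2, "*": 2}
OPS = {
    "L": math.log,                 # raises ValueError for non-positive input
    "+": lambda x, y: x + y,
    "-": lambda x, y: x - y,
    "*": lambda x, y: x * y,
}


def evaluate_gene(gene, env):
    """Decode one gene level by level, then evaluate its sub-ET."""
    root = [gene[0]]
    level, pos = [root], 1
    while level:
        next_level = []
        for node in level:
            for _ in range(ARITY.get(node[0], 0)):
                child = [gene[pos]]
                pos += 1
                node.append(child)
                next_level.append(child)
        level = next_level

    def value(node):
        if node[0] in OPS:
            return OPS[node[0]](*(value(c) for c in node[1:]))
        return env[node[0]]

    return value(root)


def express(chromosome, gene_size, env, link=sum):
    """Split the chromosome into equal genes, evaluate each sub-ET, then link them."""
    genes = [chromosome[i:i + gene_size] for i in range(0, len(chromosome), gene_size)]
    return link([evaluate_gene(g, env) for g in genes])


env = {"a": 2.0, "b": math.e, "c": 3.0, "d": 1.0}
print(express("L+a-baccd**cLabacd", 9, env))                   # close to 7.0
print(express("L+a-baccd**cLabacd", 9, env, statistics.mean))  # close to 3.5
```

Here the first gene encodes ln(a + (b − a)) and the second ((ln b) · a) · c; swapping `sum` for `statistics.mean`, `statistics.median`, or any other function of a list changes the linker without touching the genes.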

Cells and code reuse

In gene expression programming, homeotic genes control the interactions of the different sub-ETs or modules of the main program. The expression of such genes results in different main programs or cells; that is, they determine which genes are expressed in each cell and how the sub-ETs of each cell interact with one another. In other words, homeotic genes determine which sub-ETs each main program or cell calls, how often it calls them, and how it connects them.

Homeotic genes and the cellular system

Homeotic genes have exactly the same kind of structural organization as normal genes and they are built using an identical process. They also contain a head domain and a tail domain, with the difference that the heads now contain linking functions and a special kind of terminal, genic terminals, that represent the normal genes. The expression of the normal genes results as usual in different sub-ETs, which in the cellular system are called ADFs (automatically defined functions). As for the tails, they contain only genic terminals, that is, derived features generated on the fly by the algorithm.

For example, the chromosome in the figure has three normal genes and one homeotic gene and encodes a main program that invokes three different functions a total of four times, linking them in a particular way.

Expression of a unicellular system with three ADFs. a) The chromosome composed of three conventional genes and one homeotic gene (shown in bold). b) The ADFs encoded by each conventional gene. c) The main program or cell.

From this example it is clear that the cellular system allows not only the unconstrained evolution of linking functions but also code reuse. Recursion could, in principle, also be implemented in this system.

Multiple main programs and multicellular systems

Multicellular systems are composed of more than one homeotic gene. Each homeotic gene in this system puts together a different combination of sub-expression trees or ADFs, creating multiple cells or main programs.

For example, the program shown in the figure was created using a cellular system with two cells and three normal genes.

Expression of a multicellular system with three ADFs and two main programs. a) The chromosome composed of three conventional genes and two homeotic genes (shown in bold). b) The ADFs encoded by each conventional gene. c) Two different main programs expressed in two different cells.

The applications of these multicellular systems are multiple and varied and, like the multigenic systems, they can be used both in problems with just one output and in problems with multiple outputs.
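A unicellular or multicellular system can be sketched in Python as below. The genes are hypothetical, chosen only for illustration: three normal genes (head 2, tail 3) over {+, −, *} and {a, b} act as ADFs, and each homeotic gene (head 3, tail 4) uses the genic terminals “0”, “1”, and “2” to call them. Because these ADFs take no arguments of their own, the sketch evaluates each one once and reuses its value at every call.

```python
import operator

# Functions for normal genes and linking functions for homeotic genes (all binary).
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def evaluate(kexpr, leaf_value):
    """Decode a k-expression level by level and evaluate it.

    leaf_value(symbol) supplies the value of each terminal.
    """
    root = [kexpr[0]]
    level, pos = [root], 1
    while level:
        next_level = []
        for node in level:
            if node[0] in OPS:
                for _ in range(2):
                    child = [kexpr[pos]]
                    pos += 1
                    node.append(child)
                    next_level.append(child)
        level = next_level

    def value(node):
        if node[0] in OPS:
            return OPS[node[0]](value(node[1]), value(node[2]))
        return leaf_value(node[0])

    return value(root)


def run_cells(normal_genes, homeotic_genes, env):
    """Return one output per cell, that is, one per homeotic gene."""
    # Express every normal gene once: these sub-ETs are the ADFs.
    adfs = [evaluate(g, env.__getitem__) for g in normal_genes]
    # Genic terminals "0", "1", ... in a homeotic gene call the ADF with that index.
    return [evaluate(h, lambda s: adfs[int(s)]) for h in homeotic_genes]


normal = ["+abba", "*abab", "-abab"]   # ADF0 = a+b, ADF1 = a*b, ADF2 = a-b
cells = ["+*+0112", "-2*0120"]         # ADF0*ADF1 + (ADF1+ADF2) and ADF2 - ADF0*ADF1
print(run_cells(normal, cells, {"a": 3, "b": 2}))   # [37, -29]
```

With only the first homeotic gene this is a unicellular system whose main program calls three ADFs four times (ADF1 twice); adding the second homeotic gene turns the same ADFs into a two-cell, two-output program.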

Other levels of complexity

The head/tail domain of GEP genes (both normal and homeotic) is the basic building block of all GEP algorithms. However, gene expression programming also explores other chromosomal organizations that are more complex than the head/tail structure. Essentially these complex structures consist of functional units or genes with a basic head/tail domain plus one or more extra domains. These extra domains usually encode random numerical constants that the algorithm relentlessly fine-tunes in order to find a good solution. For instance, these numerical constants may be the weights or factors in a function approximation problem (see the GEP-RNC algorithm below); they may be the weights and thresholds of a neural network (see the GEP-NN algorithm below); the numerical constants needed for the design of decision trees (see the GEP-DT algorithm below); the weights needed for polynomial induction; or the random numerical constants used to discover the parameter values in a parameter optimization task.

The basic gene expression algorithm

The fundamental steps of the basic gene expression algorithm are listed below in pseudocode:

- 1. Select function set;

- 2. Select terminal set;

- 3. Load dataset for fitness evaluation;

- 4. Create chromosomes of initial population randomly;

- 5. For each program in population:

- a) Express chromosome;

- b) Execute program;

- c) Evaluate fitness;

- 6. Verify stop condition;

- 7. Select programs;

- 8. Replicate selected programs to form the next population;

- 9. Modify chromosomes using genetic operators;

- 10. Go to step 5.
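The ten steps above can be turned into a small, runnable Python sketch. Everything problem-specific is an assumption made for illustration: the target function a² + a, the function set {+, −, *}, a head length of 6, a fitness of 1000/(1 + mean absolute error), mutation as the only genetic operator, and roulette-wheel selection with simple elitism (described in the selection section).

```python
import random

# Steps 1-3 (hypothetical problem): evolve a program for a^2 + a.
FUNCS = {"+": lambda x, y: x + y, "-": lambda x, y: x - y, "*": lambda x, y: x * y}
TERMS = ["a"]
HEAD = 6
TAIL = HEAD * (2 - 1) + 1                      # t = h(n_max - 1) + 1, n_max = 2
DATA = [(x, x * x + x) for x in range(-5, 6)]  # fitness cases


def execute(gene, a):
    """Express the gene level by level, then execute the resulting tree."""
    root = [gene[0]]
    level, pos = [root], 1
    while level:
        next_level = []
        for node in level:
            if node[0] in FUNCS:               # every function takes two arguments
                for _ in range(2):
                    child = [gene[pos]]
                    pos += 1
                    node.append(child)
                    next_level.append(child)
        level = next_level

    def run(node):
        if node[0] in FUNCS:
            return FUNCS[node[0]](run(node[1]), run(node[2]))
        return a                               # the only terminal is "a"

    return run(root)


def fitness(gene):
    """Higher is better; reaches 1000 only for an exact fit."""
    error = sum(abs(execute(gene, x) - y) for x, y in DATA) / len(DATA)
    return 1000.0 / (1.0 + error)


def random_gene(rng):
    head = [rng.choice(list(FUNCS) + TERMS) for _ in range(HEAD)]
    tail = [rng.choice(TERMS) for _ in range(TAIL)]
    return "".join(head + tail)


def mutate(gene, rng, rate=0.1):
    """Point mutation: head positions take any symbol, tail positions only terminals."""
    symbols = list(gene)
    for i in range(len(symbols)):
        if rng.random() < rate:
            symbols[i] = rng.choice(list(FUNCS) + TERMS if i < HEAD else TERMS)
    return "".join(symbols)


def run_gep(pop_size=30, generations=50, seed=1):
    rng = random.Random(seed)
    population = [random_gene(rng) for _ in range(pop_size)]   # step 4
    history = []                                               # best fitness per generation
    for _ in range(generations):                               # step 10 loops back here
        scores = [fitness(g) for g in population]              # step 5
        best = max(range(pop_size), key=scores.__getitem__)
        history.append(scores[best])
        if scores[best] >= 1000.0:                             # step 6: exact fit found
            break
        # Steps 7-9: keep the best program unchanged (simple elitism), pick the
        # rest by roulette-wheel selection, and mutate the copies.
        chosen = rng.choices(population, weights=scores, k=pop_size - 1)
        population = [population[best]] + [mutate(g, rng) for g in chosen]
    return history


history = run_gep()
print(len(history), history[-1])
```

Because the best program is copied unchanged into each new population, the best fitness recorded in `history` can never decrease from one generation to the next.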

The first four steps prepare all the ingredients that are needed for the iterative loop of the algorithm (steps 5 through 10). Of these preparative steps, the crucial one is the creation of the initial population, which is generated randomly from the elements of the function and terminal sets.

Populations of programs

Like all evolutionary algorithms, gene expression programming works with populations of individuals, which in this case are computer programs. Therefore some kind of initial population must be created to get things started. Subsequent populations are descendants, via selection and genetic modification, of the initial population.

In the genotype/phenotype system of gene expression programming, it is only necessary to create the simple linear chromosomes of the individuals without worrying about the structural soundness of the programs they code for, as their expression always results in syntactically correct programs.

Fitness functions and the selection environment

Fitness functions and selection environments (called training datasets in machine learning) are the two facets of fitness and are therefore intricately connected. Indeed, the fitness of a program depends not only on the cost function used to measure its performance but also on the training data chosen to evaluate fitness.

The selection environment or training data

The selection environment consists of the set of training records, which are also called fitness cases. These fitness cases could be a set of observations or measurements concerning some problem, and they form what is called the training dataset.

The quality of the training data is essential for the evolution of good solutions. A good training set should be representative of the problem at hand and also well-balanced; otherwise the algorithm might get stuck at some local optimum. In addition, it is important to avoid unnecessarily large training datasets, as they slow the run down without improving the results. A good rule of thumb is to choose enough records for training to enable good generalization on the validation data and to leave the remaining records for validation and testing.

Fitness functions

Broadly speaking, there are essentially three different kinds of problems based on the kind of prediction being made:

- 1. Problems involving numeric (continuous) predictions;

- 2. Problems involving categorical or nominal predictions, both binomial and multinomial;

- 3. Problems involving binary or Boolean predictions.

The first type of problem goes by the name of regression; the second is known as classification, with logistic regression as a special case where, besides the crisp classifications like “Yes” or “No”, a probability is also attached to each outcome; and the last one is related to Boolean algebra and logic synthesis.

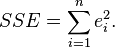

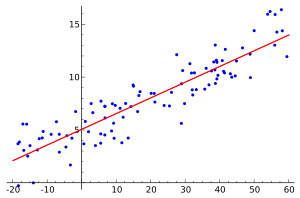

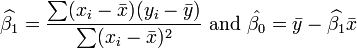

Fitness functions for regression

In regression, the response or dependent variable is numeric (usually continuous) and therefore the output of a regression model is also continuous. So it’s quite straightforward to evaluate the fitness of the evolving models by comparing the output of the model to the value of the response in the training data.

There are several basic fitness functions for evaluating model performance, with the most common being based on the error or residual between the model output and the actual value. Such functions include the mean squared error, root mean squared error, mean absolute error, relative squared error, root relative squared error, relative absolute error, and others.

All these standard measures offer a fine granularity or smoothness to the solution space and therefore work very well for most applications. But some problems might require a coarser evolution, such as determining whether a prediction is within a certain interval, for instance within 10% of the actual value. However, even if one is only interested in counting the hits (that is, predictions that fall within the chosen interval), making populations of models evolve based on just the number of hits each program scores is usually not very efficient due to the coarse granularity of the fitness landscape. Thus the solution usually involves combining these coarse measures with some kind of smooth function such as the standard error measures listed above.

Fitness functions based on the correlation coefficient and R-square are also very smooth. For regression problems, these functions work best when combined with other measures because, by themselves, they measure only correlation and ignore the range of values of the model output. Combined with functions that approximate the range of the target values, they form very efficient fitness functions for finding models with both good correlation and good fit between predicted and actual values.
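The error measures, a hits count, and R-square are easy to express directly. The sketch below is illustrative: the 10% tolerance in `hits` and the particular combination in `combined_fitness` are assumptions chosen to show how a correlation measure can be paired with an error measure, not a prescribed GEP fitness function.

```python
import math


def mse(pred, target):
    """Mean squared error."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def rmse(pred, target):
    """Root mean squared error."""
    return math.sqrt(mse(pred, target))


def mae(pred, target):
    """Mean absolute error."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(target)


def r_squared(pred, target):
    """Square of the Pearson correlation between predictions and targets."""
    n = len(target)
    mean_p, mean_t = sum(pred) / n, sum(target) / n
    cov = sum((p - mean_p) * (t - mean_t) for p, t in zip(pred, target))
    var_p = sum((p - mean_p) ** 2 for p in pred)
    var_t = sum((t - mean_t) ** 2 for t in target)
    return cov * cov / (var_p * var_t) if var_p and var_t else 0.0


def hits(pred, target, tolerance=0.10):
    """Number of predictions within a relative tolerance of the actual value."""
    return sum(abs(p - t) <= tolerance * abs(t) for p, t in zip(pred, target))


def combined_fitness(pred, target):
    """Hypothetical combination: R-square for correlation, an RMSE term for range."""
    return r_squared(pred, target) / (1.0 + rmse(pred, target))


target = [1.0, 2.0, 3.0, 4.0]
scaled = [2.0, 4.0, 6.0, 8.0]       # perfectly correlated but twice too large
print(r_squared(scaled, target))    # 1.0
print(combined_fitness(scaled, target) < combined_fitness(target, target))   # True
```

R-square alone rates the doubled predictions as perfect; the RMSE term is what penalizes their wrong range.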

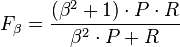

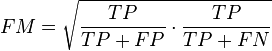

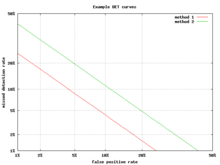

Fitness functions for classification and logistic regression

The design of fitness functions for classification and logistic regression takes advantage of three different characteristics of classification models. The most obvious is just counting the hits, that is, if a record is classified correctly it is counted as a hit. This fitness function is very simple and works well for simple problems, but for more complex problems or highly unbalanced datasets it gives poor results.

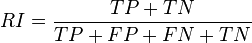

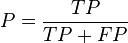

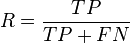

One way to improve this type of hits-based fitness function consists of expanding the notion of correct and incorrect classifications. In a binary classification task, correct classifications can be 00 or 11. The “00” representation means that a negative case (represented by “0”) was correctly classified, whereas the “11” means that a positive case (represented by “1”) was correctly classified. Classifications of the type “00” are called true negatives (TN) and “11” true positives (TP).

There are also two types of incorrect classifications and they are represented by 01 and 10. They are called false positives (FP) when the actual value is 0 and the model predicts a 1; and false negatives (FN) when the target is 1 and the model predicts a 0. The counts of TP, TN, FP, and FN are usually kept on a table known as the confusion matrix.

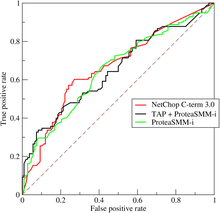

So by counting the TP, TN, FP, and FN and further assigning different weights to these four types of classifications, it is possible to create smoother and therefore more efficient fitness functions. Some popular fitness functions based on the confusion matrix include sensitivity/specificity, recall/precision, F-measure, Jaccard similarity, Matthews correlation coefficient, and cost/gain matrix which combines the costs and gains assigned to the 4 different types of classifications.
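The confusion-matrix measures listed above can be written as plain functions of the four counts. This is a sketch under simple assumptions: predictions and targets are 0/1 lists, undefined ratios are set to 0, and the unit weights in `cost_gain` are placeholders to be replaced by problem-specific costs and gains.

```python
import math


def confusion_matrix(pred, actual):
    """Return the counts (TP, TN, FP, FN) for 0/1 predictions and targets."""
    pairs = list(zip(pred, actual))
    tp = pairs.count((1, 1))
    tn = pairs.count((0, 0))
    fp = pairs.count((1, 0))   # predicted 1, actual 0
    fn = pairs.count((0, 1))   # predicted 0, actual 1
    return tp, tn, fp, fn


def ratio(x, y):
    """Division that returns 0 when the denominator is 0."""
    return x / y if y else 0.0


def sensitivity(tp, tn, fp, fn):
    """Also called recall."""
    return ratio(tp, tp + fn)


def specificity(tp, tn, fp, fn):
    return ratio(tn, tn + fp)


def precision(tp, tn, fp, fn):
    return ratio(tp, tp + fp)


def f_measure(tp, tn, fp, fn):
    p, r = precision(tp, tn, fp, fn), sensitivity(tp, tn, fp, fn)
    return ratio(2 * p * r, p + r)


def jaccard(tp, tn, fp, fn):
    return ratio(tp, tp + fp + fn)


def mcc(tp, tn, fp, fn):
    """Matthews correlation coefficient."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return ratio(tp * tn - fp * fn, denom)


def cost_gain(tp, tn, fp, fn, gains=(1, 1), costs=(1, 1)):
    """Gains for (TP, TN) minus costs for (FP, FN); the weights are placeholders."""
    return gains[0] * tp + gains[1] * tn - costs[0] * fp - costs[1] * fn


counts = confusion_matrix([1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 1, 0])
print(counts)        # (2, 2, 1, 1)
print(mcc(*counts))  # about 0.333
```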

These functions based on the confusion matrix are quite sophisticated and are adequate to solve most problems efficiently. But there is another dimension to classification models which is key to exploring more efficiently the solution space and therefore results in the discovery of better classifiers. This new dimension involves exploring the structure of the model itself, which includes not only the domain and range, but also the distribution of the model output and the classifier margin.

By exploring this other dimension of classification models and then combining the information about the model with the confusion matrix, it is possible to design very sophisticated fitness functions that allow the smooth exploration of the solution space. For instance, one can combine some measure based on the confusion matrix with the mean squared error evaluated between the raw model outputs and the actual values. Or combine the F-measure with the R-square evaluated for the raw model output and the target; or the cost/gain matrix with the correlation coefficient, and so on. More exotic fitness functions that explore model granularity include the area under the ROC curve and rank measure.

Also related to this new dimension of classification models is the idea of assigning probabilities to the model output, which is what is done in logistic regression. It is then possible to evaluate the mean squared error (or some other similar measure) between these probabilities and the actual values, and to combine this with the confusion matrix to create very efficient fitness functions for logistic regression. Popular examples of fitness functions based on the probabilities include maximum likelihood estimation and hinge loss.
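For logistic regression, the raw output of a program can be mapped to a probability with the logistic (sigmoid) function, and the probabilities scored against the 0/1 targets. The sketch below assumes that mapping and shows two such scores: the mean squared error of the probabilities and the log-likelihood that maximum likelihood estimation maximizes. The clipping constant `eps` and the 0.5 cutoff in `crisp` are arbitrary choices made here.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def probability_mse(raw_outputs, actual):
    """Mean squared error between predicted probabilities and 0/1 targets."""
    probs = [sigmoid(x) for x in raw_outputs]
    return sum((p - a) ** 2 for p, a in zip(probs, actual)) / len(actual)


def log_likelihood(raw_outputs, actual, eps=1e-12):
    """Bernoulli log-likelihood of the targets; higher is better."""
    total = 0.0
    for x, a in zip(raw_outputs, actual):
        p = min(max(sigmoid(x), eps), 1.0 - eps)   # keep log() away from 0
        total += a * math.log(p) + (1 - a) * math.log(1.0 - p)
    return total


def crisp(raw_outputs, cutoff=0.5):
    """Crisp 0/1 classes, ready for a confusion matrix."""
    return [int(sigmoid(x) >= cutoff) for x in raw_outputs]


raw = [2.0, -1.0, 0.5, -3.0]
actual = [1, 0, 0, 0]
print(crisp(raw))   # [1, 0, 1, 0]
print(probability_mse(raw, actual), log_likelihood(raw, actual))
```

The crisp classes feed the confusion-matrix measures, while the probability-based scores add the smoother signal described above.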

Fitness functions for Boolean problems

In logic there is no model structure (as defined above for classification and logistic regression) to explore: the domain and range of logical functions comprise only 0s and 1s, or false and true. So the fitness functions available for Boolean algebra can only be based on the hits or on the confusion matrix, as explained in the section above.

Selection and elitism

Roulette-wheel selection is perhaps the most popular selection scheme used in evolutionary computation. It involves mapping the fitness of each program to a slice of the roulette wheel proportional to its fitness. Then the roulette is spun as many times as there are programs in the population in order to keep the population size constant. So, with roulette-wheel selection, programs are selected both according to fitness and the luck of the draw, which means that sometimes the best traits might be lost. However, by combining roulette-wheel selection with the cloning of the best program of each generation, one guarantees that at least the very best traits are not lost. This technique of cloning the best-of-generation program is known as simple elitism and is used by most stochastic selection schemes.
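Roulette-wheel selection with simple elitism can be sketched as follows, assuming non-negative fitness values with a positive sum; the function names are illustrative. Each spin draws a point on a wheel whose slices are proportional to fitness, and the best-of-generation program is copied unconditionally so that it cannot be lost.

```python
import random


def roulette_wheel(population, fitnesses, rng):
    """One spin: pick an individual with probability proportional to its fitness."""
    spin = rng.uniform(0, sum(fitnesses))
    cumulative = 0.0
    for individual, fit in zip(population, fitnesses):
        cumulative += fit
        if spin <= cumulative:
            return individual
    return population[-1]   # guard against floating-point round-off


def select_with_elitism(population, fitnesses, rng=random):
    """New population of the same size: the clone of the best, then roulette picks."""
    best = max(range(len(population)), key=fitnesses.__getitem__)
    survivors = [population[best]]        # simple elitism
    while len(survivors) < len(population):
        survivors.append(roulette_wheel(population, fitnesses, rng))
    return survivors


population = ["A", "B", "C", "D"]
fitness = [1.0, 5.0, 0.0, 2.0]
print(select_with_elitism(population, fitness, random.Random(3)))
```

A program with zero fitness, like “C” here, gets an empty slice and is never drawn, while “B” is guaranteed a place through elitism.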

Reproduction with modification

The reproduction of programs involves first the selection and then the reproduction of their genomes. Genome modification is not required for reproduction, but without it adaptation and evolution won’t take place.

Replication and selection

The selection operator selects the programs for the replication operator to copy. Depending on the selection scheme, the number of copies one program originates may vary, with some programs getting copied more than once while others are copied just once or not at all. In addition, selection is usually set up so that the population size remains constant from one generation to another.

The replication of genomes in nature is very complex and it took scientists a long time to discover the DNA double helix and propose a mechanism for its replication. But the replication of strings is trivial in artificial evolutionary systems, where only an instruction to copy strings is required to pass all the information in the genome from generation to generation.

The replication of the selected programs is a fundamental piece of all artificial evolutionary systems, but for evolution to occur it needs to be implemented not with the usual precision of a copy instruction, but rather with a few errors thrown in. Indeed, genetic diversity is created with genetic operators such as mutation, recombination, transposition, inversion, and many others.

Mutation

In gene expression programming mutation is the most important genetic operator.[8] It changes genomes by replacing one element with another. The accumulation of many small changes over time can create great diversity.

In gene expression programming mutation is totally unconstrained, which means that in each gene domain any symbol can be replaced by any other symbol allowed in that domain. For example, in the heads of genes any function can be replaced by a terminal or another function, regardless of the number of arguments in this new function; and a terminal can be replaced by a function or another terminal. In the tails, terminals can only be replaced by other terminals.
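Unconstrained mutation that still respects the gene domains can be sketched like this, assuming the function set {Q, +, −, *, /}, the terminal set {a, b}, and a per-position mutation rate; the names are illustrative.

```python
import random

FUNCTIONS = ["Q", "+", "-", "*", "/"]
TERMINALS = ["a", "b"]


def mutate(gene, head_length, rate, rng=random):
    """Replace each symbol with probability `rate` by a symbol allowed in its domain.

    The redrawn symbol may happen to equal the old one.
    """
    symbols = list(gene)
    for i in range(len(symbols)):
        if rng.random() < rate:
            # Head: any function (of any arity) or terminal; tail: terminals only.
            pool = FUNCTIONS + TERMINALS if i < head_length else TERMINALS
            symbols[i] = rng.choice(pool)
    return "".join(symbols)


gene = "*b+a-aQab+//+b+babbabbbababbaaa"   # head length 15, tail length 16
print(gene)
print(mutate(gene, 15, 0.1, random.Random(7)))
```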

Recombination

Recombination usually involves two parent chromosomes to create two new chromosomes by combining different parts from the parent chromosomes. And as long as the parent chromosomes are aligned and the exchanged fragments are homologous (that is, occupy the same position in the chromosome), the new chromosomes created by recombination will always encode syntactically correct programs.

Different kinds of crossover are easily implemented either by changing the number of parents involved (there’s no reason for choosing only two); the number of split points; or the way one chooses to exchange the fragments, for example, either randomly or in some orderly fashion. For example, gene recombination, which is a special case of recombination, can be done by exchanging homologous genes (genes that occupy the same position in the chromosome) or by exchanging genes chosen at random from any position in the chromosome.
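One-point, two-point, and gene recombination can be sketched in a few lines. The second parent below is a hypothetical two-gene string with genes of size 9 (head 4, tail 5), like the example chromosome from the encoding section. Because every position in a child is copied from the same position in one of the aligned parents, head positions receive head symbols and tail positions receive tail symbols.

```python
import random


def one_point(parent1, parent2, rng=random):
    """Cut both aligned parents at the same point and swap the right-hand parts."""
    point = rng.randrange(1, len(parent1))
    return parent1[:point] + parent2[point:], parent2[:point] + parent1[point:]


def two_point(parent1, parent2, rng=random):
    """Swap the segment lying between two distinct cut points."""
    i, j = sorted(rng.sample(range(1, len(parent1)), 2))
    return (parent1[:i] + parent2[i:j] + parent1[j:],
            parent2[:i] + parent1[i:j] + parent2[j:])


def gene_recombination(parent1, parent2, gene_size, rng=random):
    """Exchange one entire gene, chosen at random, between homologous positions."""
    start = rng.randrange(len(parent1) // gene_size) * gene_size
    end = start + gene_size
    return (parent1[:start] + parent2[start:end] + parent1[end:],
            parent2[:start] + parent1[start:end] + parent2[end:])


p1 = "L+a-baccd**cLabacd"
p2 = "+abcdabcd*-abdcbaa"
print(one_point(p1, p2, random.Random(1)))
print(gene_recombination(p1, p2, 9, random.Random(1)))
```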

Transposition

Transposition involves the introduction of an insertion sequence somewhere in a chromosome. In gene expression programming insertion sequences might appear anywhere in the chromosome, but they are only inserted in the heads of genes. This method guarantees that even insertion sequences from the tails result in error-free programs.

For transposition to work properly, it must preserve chromosome length and gene structure. So, in gene expression programming transposition can be implemented using two different methods: the first creates a shift at the insertion site, followed by a deletion at the end of the head; the second overwrites the local sequence at the target site and therefore is easier to implement. Both methods can be implemented to operate between chromosomes or within a chromosome or even within a single gene.
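Both transposition methods can be sketched for a single gene. The sketch assumes a maximum insertion-sequence length of 3 and keeps the root (position 0) out of reach, on the assumption that changing the root belongs to root transposition, which is listed among the other operators; the transposon may be copied from anywhere in the gene, including the tail. The names and parameters are illustrative.

```python
import random


def is_transposition(gene, head_length, max_len=3, overwrite=False, rng=random):
    """Copy a random segment of the gene into the head, keeping the gene length.

    overwrite=False: shift the head right at the insertion site and delete the
    symbols pushed past the end of the head.
    overwrite=True: overwrite the symbols at the insertion site instead.
    """
    length = rng.randint(1, max_len)
    start = rng.randrange(len(gene) - length + 1)
    segment = gene[start:start + length]
    target = rng.randrange(1, head_length)       # any head position except the root
    head = gene[:head_length]
    if overwrite:
        new_head = head[:target] + segment + head[target + length:]
    else:
        new_head = head[:target] + segment + head[target:]
    # Cut back to the original head length; the tail is never modified.
    return new_head[:head_length] + gene[head_length:]


gene = "*b+a-aQab+//+b+babbabbbababbaaa"
print(is_transposition(gene, 15, rng=random.Random(2)))
print(is_transposition(gene, 15, overwrite=True, rng=random.Random(2)))
```

Any symbol may land in the head, and the tail stays all terminals, so the offspring always decodes to a valid program.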

Inversion

Inversion is an interesting operator, especially powerful for combinatorial optimization.[9] It consists of inverting a small sequence within a chromosome.

In gene expression programming it can be easily implemented in all gene domains and, in all cases, the offspring produced is always syntactically correct. For any gene domain, a sequence (ranging from at least two elements to as big as the domain itself) is chosen at random within that domain and then inverted.
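Inversion restricted to one domain can be sketched as follows. The function name and the example head/tail boundaries are illustrative; because nothing leaves its domain, the same function also applies to extra domains such as the Dc of the GEP-RNC algorithm.

```python
import random


def invert_domain(gene, domain_start, domain_end, rng=random):
    """Reverse a random stretch of at least two symbols inside one gene domain.

    The domain is gene[domain_start:domain_end]; the stretch can be as long
    as the whole domain, and nothing outside the domain moves.
    """
    size = rng.randint(2, domain_end - domain_start)
    start = rng.randint(domain_start, domain_end - size)
    return gene[:start] + gene[start:start + size][::-1] + gene[start + size:]


gene = "*b+a-aQab+//+b+babbabbbababbaaa"
print(invert_domain(gene, 0, 15, random.Random(4)))    # head: positions 0-14
print(invert_domain(gene, 15, 31, random.Random(4)))   # tail: positions 15-30
```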

Other genetic operators

Several other genetic operators exist and in gene expression programming, with its different genes and gene domains, the possibilities are endless. For example, genetic operators such as one-point recombination, two-point recombination, gene recombination, uniform recombination, gene transposition, root transposition, domain-specific mutation, domain-specific inversion, domain-specific transposition, and so on, are easily implemented and widely used.

The GEP-RNC algorithm

Numerical constants are essential elements of mathematical and statistical models and therefore it is important to allow their integration in the models designed by evolutionary algorithms.

Gene expression programming solves this problem very elegantly through the use of an extra gene domain – the Dc – for handling random numerical constants (RNC). By combining this domain with a special terminal placeholder for the RNCs, a richly expressive system can be created.

Structurally, the Dc comes after the tail, has a length equal to the size of the tail t, and is composed of the symbols used to represent the RNCs.

For example, below is shown a simple chromosome composed of only one gene with a head size of 7 (the Dc stretches over positions 15–22):

01234567890123456789012
+?*+?**aaa??aaa68083295

where the terminal “?” represents the placeholder for the RNCs. This kind of chromosome is expressed exactly as shown above, giving:

Then the ?’s in the expression tree are replaced from left to right and from top to bottom by the symbols (for simplicity represented by numerals) in the Dc, giving:

The values corresponding to these symbols are kept in an array. (For simplicity, the number represented by the numeral indicates the position in the array.) For instance, for the following 10-element array of RNCs:

- C = {0.611, 1.184, 2.449, 2.98, 0.496, 2.286, 0.93, 2.305, 2.737, 0.755}

the expression tree above gives:

This elegant structure for handling random numerical constants is at the heart of different GEP systems, such as GEP neural networks and GEP decision trees.
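The expression of a GEP-RNC gene can be sketched in Python for the one-gene example above. The sketch assumes the function set {+, −, *, /}, so that nmax = 2 and t = h + 1, and assigns the Dc symbols to the “?” placeholders in the level-by-level, left-to-right order in which they appear in the k-expression; `express_rnc` is a hypothetical name.

```python
import math

# Function set {+, -, *, /}: every function takes two arguments, so n_max = 2.
OPS = {
    "+": lambda x, y: x + y,
    "-": lambda x, y: x - y,
    "*": lambda x, y: x * y,
    "/": lambda x, y: x / y,
}


def express_rnc(gene, h, constants, env):
    """Express a one-gene GEP-RNC chromosome: head, tail, then the Dc domain."""
    t = h + 1                                  # t = h(n_max - 1) + 1
    dc = gene[h + t:]                          # the Dc follows the tail
    root = [gene[0]]
    order, pos, i = [root], 1, 0               # order: nodes in level-by-level order
    while i < len(order):
        node = order[i]
        if node[0] in OPS:
            for _ in range(2):
                child = [gene[pos]]
                pos += 1
                node.append(child)
                order.append(child)
        i += 1
    # Replace each expressed "?" by the constant indexed by the next Dc symbol.
    placeholders = [node for node in order if node[0] == "?"]
    for node, symbol in zip(placeholders, dc):
        node[0] = constants[int(symbol)]

    def value(node):
        symbol = node[0]
        if isinstance(symbol, float):          # a numerical constant
            return symbol
        if symbol in OPS:
            return OPS[symbol](value(node[1]), value(node[2]))
        return env[symbol]                     # a variable

    return value(root)


C = [0.611, 1.184, 2.449, 2.98, 0.496, 2.286, 0.93, 2.305, 2.737, 0.755]
gene = "+?*+?**aaa??aaa68083295"
print(express_rnc(gene, 7, C, {"a": 1.0}))    # about 5.339
```

Under this ordering, the three expressed placeholders take the Dc symbols 6, 8, and 0, so the gene encodes 0.93 + 2.737(a² + 0.611a); the remaining Dc symbols go unused, much like the noncoding end of the tail.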

Like the basic gene expression algorithm, the GEP-RNC algorithm is also multigenic and its chromosomes are decoded as usual by expressing one gene after another and then linking them all together by the same kind of linking process.

The genetic operators used in the GEP-RNC system are an extension of the genetic operators of the basic GEP algorithm (see above), and they can all be implemented straightforwardly in these new chromosomes. In particular, the basic operators of mutation, inversion, transposition, and recombination are also used in the GEP-RNC algorithm. Furthermore, special Dc-specific operators such as mutation, inversion, and transposition are also used to aid in a more efficient circulation of the RNCs among individual programs. In addition, there is also a special mutation operator that allows the permanent introduction of variation in the set of RNCs. The initial set of RNCs is randomly created at the beginning of a run, which means that, for each gene in the initial population, a specified number of numerical constants, chosen from a certain range, are randomly generated. Their circulation and mutation are then enabled by the genetic operators.

Neural networks

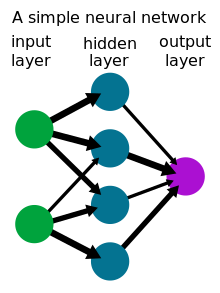

An artificial neural network (ANN or NN) is a computational device that consists of many simple connected units or neurons. The connections between the units are usually weighted by real-valued weights. These weights are the primary means of learning in neural networks and a learning algorithm is usually used to adjust them.

Structurally, a neural network has three different classes of units: input units, hidden units, and output units. An activation pattern is presented at the input units and then spreads in a forward direction from the input units through one or more layers of hidden units to the output units. The activation coming into one unit from another unit is multiplied by the weight on the link over which it spreads. All incoming activation is then added together and the unit becomes activated only if the incoming result is above the unit’s threshold.

In summary, the basic components of a neural network are the units, the connections between the units, the weights, and the thresholds. So, in order to fully simulate an artificial neural network one must somehow encode these components in a linear chromosome and then be able to express them in a meaningful way.

In GEP neural networks (GEP-NN or GEP nets), the network architecture is encoded in the usual structure of a head/tail domain.[10] The head contains special functions/neurons that activate the hidden and output units (in the GEP context, all these units are more appropriately called functional units) and terminals that represent the input units. The tail, as usual, contains only terminals/input units.

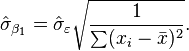

Besides the head and the tail, these neural network genes contain two additional domains, Dw and Dt, for encoding the weights and thresholds of the neural network. Structurally, the Dw comes after the tail and its length dw depends on the head size h and maximum arity nmax and is evaluated by the formula:

$$d_w = h \cdot n_{\max}$$

The Dt comes after Dw and has a length dt equal to t. Both domains are composed of symbols representing the weights and thresholds of the neural network.

For each NN-gene, the weights and thresholds are created at the beginning of each run, but their circulation and adaptation are guaranteed by the usual genetic operators of mutation, transposition, inversion, and recombination. In addition, special operators are also used to allow a constant flow of genetic variation in the set of weights and thresholds.

For example, below is shown a neural network with two input units (i1 and i2), two hidden units (h1 and h2), and one output unit (o1). It has a total of six connections with six corresponding weights represented by the numerals 1–6 (for simplicity, the thresholds are all equal to 1 and are omitted):

This representation is the canonical neural network representation, but neural networks can also be represented by a tree, which, in this case, corresponds to:

where “a” and “b” represent the two inputs i1 and i2 and “D” represents a function with connectivity two. This function adds all its weighted arguments and then thresholds this activation in order to determine the forwarded output. This output (zero or one in this simple case) depends on the threshold of each unit, that is, if the total incoming activation is equal to or greater than the threshold, then the output is one, zero otherwise.

The above NN-tree can be linearized as follows:

0123456789012
DDDabab654321

where the structure in positions 7–12 (Dw) encodes the weights. The values of each weight are kept in an array and retrieved as necessary for expression.

As a more concrete example, below is a neural network gene for the exclusive-or problem. It has a head size of 3 and a Dw size of 6:

0123456789012
DDDabab393257

Its expression results in the following neural network:

which, for the set of weights:

- W = {−1.978, 0.514, −0.465, 1.22, −1.686, −1.797, 0.197, 1.606, 0, 1.753}

it gives:

which is a perfect solution to the exclusive-or function.
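The claim can be checked with a short script. The Python sketch below reads the head and tail level by level, attaches the weights of W to the connections in the same top-to-bottom, left-to-right order in which the Dw symbols appear, and uses a threshold of 1 for every unit. The weight-assignment order and the threshold of 1 are assumptions (they do reproduce the exclusive-or truth table), and `express` and `evaluate` are hypothetical helper names.

```python
# Weights indexed by the digits 0-9 of the Dw domain (from the text above).
W = [-1.978, 0.514, -0.465, 1.22, -1.686, -1.797, 0.197, 1.606, 0, 1.753]
ARITY = {"D": 2, "a": 0, "b": 0}  # "D" has connectivity two; inputs are leaves


def express(gene: str, head_size: int, n_max: int = 2) -> dict:
    """Build the expression tree of a GEP-NN gene and attach weights."""
    t = head_size * (n_max - 1) + 1                 # tail length
    symbols = gene[: head_size + t]                 # head + tail
    dw = [int(c) for c in gene[head_size + t :]]    # indices into W
    root = {"sym": symbols[0], "children": []}
    nodes, next_pos, i = [root], 1, 0
    # Read level by level: each "D" takes the next two unused symbols.
    while i < len(nodes):
        for _ in range(ARITY[nodes[i]["sym"]]):
            child = {"sym": symbols[next_pos], "children": []}
            next_pos += 1
            nodes[i]["children"].append(child)
            nodes.append(child)
        i += 1
    # Assumed rule: weights go to connections top to bottom, left to right.
    k = 0
    for node in nodes:
        n = len(node["children"])
        node["weights"] = [W[j] for j in dw[k : k + n]]
        k += n
    return root


def evaluate(node: dict, inputs: dict, threshold: float = 1.0) -> int:
    """Return the 0/1 output of a node; leaves return their input value."""
    if not node["children"]:
        return inputs[node["sym"]]
    activation = sum(
        w * evaluate(child, inputs, threshold)
        for w, child in zip(node["weights"], node["children"])
    )
    return 1 if activation >= threshold else 0


net = express("DDDabab393257", head_size=3)
for a in (0, 1):
    for b in (0, 1):
        print(a, b, evaluate(net, {"a": a, "b": b}))
# 0 0 0
# 0 1 1
# 1 0 1
# 1 1 0
```

Under these assumptions the hidden unit fed by weights 1.22 and −0.465 fires only for (1, 0), the other one (−1.797 and 1.606) fires only for (0, 1), and the output unit (1.22 and 1.753) acts as an OR of the two.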

Besides simple Boolean functions with binary inputs and binary outputs, the GEP-nets algorithm can handle all kinds of functions or neurons (linear neuron, tanh neuron, atan neuron, logistic neuron, limit neuron, radial basis and triangular basis neurons, all kinds of step neurons, and so on). Also interesting is that the GEP-nets algorithm can use all these neurons together and let evolution decide which ones work best to solve the problem at hand. So, GEP-nets can be used not only in Boolean problems but also in logistic regression, classification, and regression. In all cases, GEP-nets can be implemented not only with multigenic systems but also with cellular systems, both unicellular and multicellular. Furthermore, multinomial classification problems can also be tackled in one go by GEP-nets, with both multigenic and multicellular systems.

Decision trees

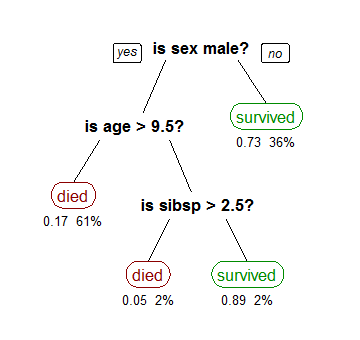

Decision trees (DT) are classification models where a series of questions and answers are mapped using nodes and directed edges.

Decision trees have three types of nodes: a root node, internal nodes, and leaf or terminal nodes. The root node and all internal nodes represent test conditions for different attributes or variables in a dataset. Leaf nodes specify the class label for all different paths in the tree.

Most decision tree induction algorithms involve selecting an attribute for the root node and then making the same kind of informed decision about all the other nodes in the tree.

Decision trees can also be created by gene expression programming,[11] with the advantage that all the decisions concerning the growth of the tree are made by the algorithm itself without any kind of human input.

There are basically two different types of DT algorithms: one for inducing decision trees with only nominal attributes and another for inducing decision trees with both numeric and nominal attributes. This aspect of decision tree induction also carries over to gene expression programming, and there are two GEP algorithms for decision tree induction: the evolvable decision trees (EDT) algorithm for dealing exclusively with nominal attributes and the EDT-RNC (EDT with random numerical constants) for handling both nominal and numeric attributes.

In the decision trees induced by gene expression programming, the attributes behave as function nodes in the basic gene expression algorithm, whereas the class labels behave as terminals. This means that attribute nodes also have a specific arity, or number of branches, associated with them that will determine their growth and, ultimately, the growth of the tree. Class labels behave like terminals, which means that for a k-class classification task, a terminal set with k terminals is used, representing the k different classes.

The rules for encoding a decision tree in a linear genome are very similar to the rules used to encode mathematical expressions (see above). So, for decision tree induction the genes also have a head and a tail, with the head containing attributes and terminals and the tail containing only terminals. This again ensures that all decision trees designed by GEP are always valid programs. Furthermore, the size of the tail t is also dictated by the head size h and the number of branches nmax of the attribute with the most branches, and is evaluated by the equation:

$$t = h\,(n_{\max} - 1) + 1$$

For example, consider the decision tree below to decide whether to play outside:

It can be linearly encoded as:

01234567
HOWbaaba

where “H” represents the attribute Humidity, “O” the attribute Outlook, “W” the attribute Windy, and “a” and “b” the class labels "Yes" and "No", respectively. Note that the edges connecting the nodes are properties of the data, specifying the type and number of branches of each attribute, and therefore don’t have to be encoded.
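Because the branches come from the data, expressing this string only requires knowing how many branches each attribute has. The Python sketch below builds the tree level by level and classifies an example; the branch values and their order (for instance High before Normal) are hypothetical, since the original figure is not available, and `express` and `classify` are illustrative names.

```python
# Hypothetical branch values; their number fixes each attribute's arity,
# their order fixes which child a value leads to.
BRANCHES = {
    "H": ("High", "Normal"),              # Humidity (assumed values)
    "O": ("Sunny", "Overcast", "Rainy"),  # Outlook (assumed values)
    "W": ("True", "False"),               # Windy (assumed values)
}
ATTRIBUTE = {"H": "Humidity", "O": "Outlook", "W": "Windy"}
LABELS = {"a": "Yes", "b": "No"}


def express(kexpr: str) -> dict:
    """Build the tree breadth-first: each attribute takes as many of the
    following unused symbols as it has branches; class labels take none."""
    nodes = [{"sym": kexpr[0], "children": []}]
    next_pos, i = 1, 0
    while i < len(nodes):
        for _ in BRANCHES.get(nodes[i]["sym"], ()):
            child = {"sym": kexpr[next_pos], "children": []}
            next_pos += 1
            nodes[i]["children"].append(child)
            nodes.append(child)
        i += 1
    return nodes[0]


def classify(node: dict, example: dict) -> str:
    """Follow the branch matching the example's value until a leaf."""
    while node["children"]:
        value = example[ATTRIBUTE[node["sym"]]]
        node = node["children"][BRANCHES[node["sym"]].index(value)]
    return LABELS[node["sym"]]


tree = express("HOWbaaba")
print(classify(tree, {"Humidity": "High", "Outlook": "Overcast"}))  # Yes
print(classify(tree, {"Humidity": "Normal", "Windy": "True"}))      # No
```

Under these assumptions, Humidity takes O and W as its children, Outlook takes b, a, a, and Windy takes b, a, which uses all eight symbols of the string.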

The process of decision tree induction with gene expression programming starts, as usual, with an initial population of randomly created chromosomes. Then the chromosomes are expressed as decision trees and their fitness is evaluated against a training dataset. According to fitness, they are then selected to reproduce with modification. The genetic operators are exactly the same as those used in a conventional unigenic system, for example, mutation, inversion, transposition, and recombination.
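The head/tail rule is what keeps these operators safe. The Python sketch below covers only point mutation: head positions may receive any attribute or class label, tail positions only class labels, and a helper counts how many symbols are actually expressed to confirm that every mutant still forms a complete tree. The attribute arities are assumed from the play-outside example, and `random_gene`, `mutate` and `orf_length` are hypothetical names.

```python
import random

# Assumed symbol sets: attributes with their number of branches, plus labels.
ATTRIBUTES = {"H": 2, "O": 3, "W": 2}
LABELS = "ab"
HEAD_SYMBOLS = list(ATTRIBUTES) + list(LABELS)


def random_gene(h: int, rng: random.Random) -> str:
    """Random gene: any symbol in the head, only labels in the tail."""
    n_max = max(ATTRIBUTES.values())
    t = h * (n_max - 1) + 1                       # tail size
    head = "".join(rng.choice(HEAD_SYMBOLS) for _ in range(h))
    tail = "".join(rng.choice(LABELS) for _ in range(t))
    return head + tail


def mutate(gene: str, h: int, rate: float, rng: random.Random) -> str:
    """Point mutation that respects the head/tail domains."""
    out = []
    for pos, sym in enumerate(gene):
        if rng.random() < rate:
            sym = rng.choice(HEAD_SYMBOLS if pos < h else LABELS)
        out.append(sym)
    return "".join(out)


def orf_length(gene: str) -> int:
    """Number of symbols actually expressed when reading breadth-first."""
    needed, used = 1, 0
    while used < needed:
        needed += ATTRIBUTES.get(gene[used], 0)   # labels add no children
        used += 1
    return used


rng = random.Random(42)
gene = random_gene(h=3, rng=rng)
ok = True
for _ in range(1000):
    gene = mutate(gene, h=3, rate=0.2, rng=rng)
    ok = ok and orf_length(gene) <= len(gene)
print(len(gene), ok)  # 10 True
```

Even the worst case, a head made only of three-branch attributes such as "OOO", needs 1 + 3·3 = 10 symbols, exactly h + t for h = 3, so the tail never runs out.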

Decision trees with both nominal and numeric attributes are also easily induced with gene expression programming using the framework described above for dealing with random numerical constants. The chromosomal architecture includes an extra domain for encoding random numerical constants, which are used as thresholds for splitting the data at each branching node. For example, the gene below with a head size of 5 (the Dc starts at position 16):

012345678901234567890
WOTHabababbbabba46336

encodes the decision tree shown below:

In this system, every node in the head, irrespective of its type (numeric attribute, nominal attribute, or terminal), has associated with it a random numerical constant, which for simplicity in the example above is represented by a numeral 0–9. These random numerical constants are encoded in the Dc domain and their expression follows a very simple scheme: from top to bottom and from left to right, the elements in Dc are assigned one-by-one to the elements in the decision tree. So, for the following array of RNCs:

- C = {62, 51, 68, 83, 86, 41, 43, 44, 9, 67}

the decision tree above results in:

which can also be represented more colorfully as a conventional decision tree:
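The assignment of constants can be traced by hand or with a few lines of code. The Python sketch below applies the rule described above to the gene WOTHabababbbabba46336: each head position receives the constant indexed by the matching Dc symbol, and tail nodes receive none. It assumes that T and H are the numeric attributes (presumably Temperature and Humidity) with two branches each, that W has two branches and O three, and `expressed_nodes` is a hypothetical name; how a threshold is compared against the data (≤ or >) is not modeled.

```python
# Assumed arities and attribute types for this example.
ARITY = {"W": 2, "O": 3, "T": 2, "H": 2}
NUMERIC = {"T", "H"}
C = [62, 51, 68, 83, 86, 41, 43, 44, 9, 67]   # array of RNCs from the text


def expressed_nodes(gene: str, h: int) -> list:
    """Return (position, symbol, constant) for every expressed node,
    read top to bottom and left to right."""
    n_max = max(ARITY.values())
    t = h * (n_max - 1) + 1
    dc = [int(d) for d in gene[h + t : h + t + h]]  # one RNC index per head position
    nodes, needed, pos = [], 1, 0
    while pos < needed:
        sym = gene[pos]
        needed += ARITY.get(sym, 0)               # class labels add no children
        const = C[dc[pos]] if pos < h else None   # only head nodes carry an RNC
        nodes.append((pos, sym, const))
        pos += 1
    return nodes


for pos, sym, const in expressed_nodes("WOTHabababbbabba46336", h=5):
    if sym in NUMERIC:
        print(f"{sym} splits at {const}")
# T splits at 83
# H splits at 83
```

With h = 5 and nmax = 3 the tail has 11 symbols, so Dc starts at position 16, in agreement with the text; the nominal attributes W and O also receive constants (86 and 43), but these are simply not used.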

Criticism

GEP has been criticized for not being a major improvement over other genetic programming techniques. In many experiments, it did not perform better than existing methods.[12]

Software

Commercial applications

- GeneXproTools

- GeneXproTools is a predictive analytics suite developed by Gepsoft. GeneXproTools modeling frameworks include logistic regression, classification, regression, time series prediction, and logic synthesis. GeneXproTools implements the basic gene expression algorithm and the GEP-RNC algorithm, both used in all the modeling frameworks of GeneXproTools.

Open source libraries

- GEP4J – GEP for Java Project

- Created by Jason Thomas, GEP4J is an open-source implementation of gene expression programming in Java. It implements different GEP algorithms, including evolving decision trees (with nominal, numeric, or mixed attributes) and automatically defined functions. GEP4J is hosted at Google Code.

- PyGEP – Gene Expression Programming for Python

- Created by Ryan O'Neil with the goal of creating a simple library suitable for the academic study of gene expression programming in Python, aiming for ease of use and rapid implementation. It implements standard multigenic chromosomes and the genetic operators mutation, crossover, and transposition. PyGEP is hosted at Google Code.

- jGEP – Java GEP toolkit

- Created by Matthew Sottile to rapidly build Java prototypes of GEP-based code, which can then be rewritten in a language such as C or Fortran for speed. jGEP is hosted at SourceForge.

Further reading

See also

References

- ^ Box, G. E. P. (1957). "Evolutionary operation: A method for increasing industrial productivity". Applied Statistics, Vol. 6: 81–101.

- ^ Friedman, G. J. (1959). "Digital simulation of an evolutionary process". General Systems Yearbook, Vol. 4: 171–184.

- ^ Rechenberg, Ingo (1973). Evolutionsstrategie. Stuttgart: Frommann-Holzboog. ISBN 3-7728-0373-3.

- ^ Mitchell, Melanie (1996). An Introduction to Genetic Algorithms. Cambridge, MA: MIT Press.

- ^ Ferreira, C. (2001). "Gene Expression Programming: A New Adaptive Algorithm for Solving Problems". Complex Systems, Vol. 13, issue 2: 87–129.

- ^ Ferreira, C. (2002). "Gene Expression Programming: Mathematical Modeling by an Artificial Intelligence". Portugal: Angra do Heroismo. ISBN 972-95890-5-4.

- ^ Ferreira, C. (2006). "Automatically Defined Functions in Gene Expression Programming". In N. Nedjah, L. de M. Mourelle, A. Abraham, eds., Genetic Systems Programming: Theory and Experiences, Studies in Computational Intelligence, Vol. 13, pp. 21–56, Springer-Verlag.

- ^ Ferreira, C. (2002). "Mutation, Transposition, and Recombination: An Analysis of the Evolutionary Dynamics". In H. J. Caulfield, S.-H. Chen, H.-D. Cheng, R. Duro, V. Honavar, E. E. Kerre, M. Lu, M. G. Romay, T. K. Shih, D. Ventura, P. P. Wang, Y. Yang, eds., Proceedings of the 6th Joint Conference on Information Sciences, 4th International Workshop on Frontiers in Evolutionary Algorithms, pages 614–617, Research Triangle Park, North Carolina, USA.

- ^ Ferreira, C. (2002). "Combinatorial Optimization by Gene Expression Programming: Inversion Revisited". In J. M. Santos and A. Zapico, eds., Proceedings of the Argentine Symposium on Artificial Intelligence, pages 160–174, Santa Fe, Argentina.

- ^ Ferreira, C. (2006). "Designing Neural Networks Using Gene Expression Programming". In A. Abraham, B. de Baets, M. Köppen, and B. Nickolay, eds., Applied Soft Computing Technologies: The Challenge of Complexity, pages 517–536, Springer-Verlag.

- ^ Ferreira, C. (2006). Gene Expression Programming: Mathematical Modeling by an Artificial Intelligence. Springer-Verlag. ISBN 3-540-32796-7.

- ^ Oltean, M.; Grosan, C. (2003). "A comparison of several linear genetic programming techniques". Complex Systems, Vol. 14, issue 4: 285–314.

External links

where

where

as input and results in a workflow net being constructed.

as input and results in a workflow net being constructed. of tasks.

of tasks. is the set of all tasks which occur in at least one trace

is the set of all tasks which occur in at least one trace is the set of all tasks which occur trace-initially

is the set of all tasks which occur trace-initially is the set of all tasks which occur trace-terminally

is the set of all tasks which occur trace-terminally first, the latter three can be constructed therefrom)

first, the latter three can be constructed therefrom) iff b directly precedes a in some trace

iff b directly precedes a in some trace iff

iff

iff

iff

iff

iff

is the set of all pairs

is the set of all pairs  of maximal sets of tasks such that

of maximal sets of tasks such that  and

and  contain any members of

contain any members of  and

and is a subset of

is a subset of

contains one place

contains one place  for every member of

for every member of  and the output place

and the output place

is the union of the following:

is the union of the following:

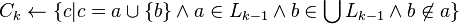

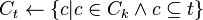

, the Apriori algorithm identifies the item sets which are subsets of at least

, the Apriori algorithm identifies the item sets which are subsets of at least  from item sets of length

from item sets of length  . Then it prunes the candidates which have an infrequent sub pattern. According to the

. Then it prunes the candidates which have an infrequent sub pattern. According to the  . Usual set theoretic notation is employed, though note that

. Usual set theoretic notation is employed, though note that  is the candidate set for level

is the candidate set for level ![count[c]](http://upload.wikimedia.org/math/a/2/8/a284e9fea0f8e3a2185d04dc91330e7c.png) accesses a field of the data structure that represents candidate set

accesses a field of the data structure that represents candidate set  , which is initially assumed to be zero. Many details are omitted below, usually the most important part of the implementation is the data structure used for storing the candidate sets, and counting their frequencies.

, which is initially assumed to be zero. Many details are omitted below, usually the most important part of the implementation is the data structure used for storing the candidate sets, and counting their frequencies.

![count[c] \gets count[c]+1](http://upload.wikimedia.org/math/0/6/8/06877396723fd139a75e2c0eb74db9f9.png)

![L_k \gets \{ c |c \in C_k \and ~ count[c] \geq \epsilon \}](http://upload.wikimedia.org/math/c/0/7/c074e501c8d3df66a7899d6bbb652e1e.png)

of its proper subsets.

of its proper subsets.

found in the sales data of a supermarket would indicate that if a customer buys onions and potatoes together, he or she is likely to also buy hamburger meat. Such information can be used as the basis for decisions about marketing activities such as, e.g., promotional

found in the sales data of a supermarket would indicate that if a customer buys onions and potatoes together, he or she is likely to also buy hamburger meat. Such information can be used as the basis for decisions about marketing activities such as, e.g., promotional  be a set of

be a set of  binary attributes called items. Let

binary attributes called items. Let  be a set of transactions called the database. Each transaction in

be a set of transactions called the database. Each transaction in  has a unique transaction ID and contains a subset of the items in

has a unique transaction ID and contains a subset of the items in  . A rule is defined as an implication of the form

. A rule is defined as an implication of the form  where

where  and

and  . The sets of items (for short itemsets)

. The sets of items (for short itemsets)  and

and  are called antecedent (left-hand-side or LHS) and consequent (right-hand-side or RHS) of the rule respectively.

are called antecedent (left-hand-side or LHS) and consequent (right-hand-side or RHS) of the rule respectively. and a small database containing the items (1 codes presence and 0 absence of an item in a transaction) is shown in the table to the right. An example rule for the supermarket could be

and a small database containing the items (1 codes presence and 0 absence of an item in a transaction) is shown in the table to the right. An example rule for the supermarket could be  meaning that if butter and bread are bought, customers also buy milk.

meaning that if butter and bread are bought, customers also buy milk. of an itemset

of an itemset  has a support of

has a support of  since it occurs in 20% of all transactions (1 out of 5 transactions).

since it occurs in 20% of all transactions (1 out of 5 transactions). . For example, the rule

. For example, the rule  has a confidence of

has a confidence of  in the database, which means that for 50% of the transactions containing milk and bread the rule is correct (50% of the times a customer buys milk and bread, butter is bought as well). Be careful when reading the expression: here supp(X∪Y) means "support for occurrences of transactions where X and Y both appear", not "support for occurrences of transactions where either X or Y appears", the latter interpretation arising because set union is equivalent to

in the database, which means that for 50% of the transactions containing milk and bread the rule is correct (50% of the times a customer buys milk and bread, butter is bought as well). Be careful when reading the expression: here supp(X∪Y) means "support for occurrences of transactions where X and Y both appear", not "support for occurrences of transactions where either X or Y appears", the latter interpretation arising because set union is equivalent to  is a set of preconditions, and thus becomes more restrictive as it grows (instead of more inclusive).

is a set of preconditions, and thus becomes more restrictive as it grows (instead of more inclusive). , the probability of finding the RHS of the rule in transactions under the condition that these transactions also contain the LHS.

, the probability of finding the RHS of the rule in transactions under the condition that these transactions also contain the LHS. or the ratio of the observed support to that expected if X and Y were

or the ratio of the observed support to that expected if X and Y were  .

. . The rule

. The rule  , and can be interpreted as the ratio of the expected frequency that X occurs without Y (that is to say, the frequency that the rule makes an incorrect prediction) if X and Y were independent divided by the observed frequency of incorrect predictions. In this example, the conviction value of 1.2 shows that the rule

, and can be interpreted as the ratio of the expected frequency that X occurs without Y (that is to say, the frequency that the rule makes an incorrect prediction) if X and Y were independent divided by the observed frequency of incorrect predictions. In this example, the conviction value of 1.2 shows that the rule

items. This is called the downward-closure property.

items. This is called the downward-closure property. (excluding the empty set which is not a valid itemset). Although the size of the powerset grows exponentially in the number of items

(excluding the empty set which is not a valid itemset). Although the size of the powerset grows exponentially in the number of items

, which makes them too slow for large data sets. For some special cases, optimal efficient methods (of complexity

, which makes them too slow for large data sets. For some special cases, optimal efficient methods (of complexity  ) are known: SLINK

) are known: SLINK

, and produces a hierarchical result related to that of

, and produces a hierarchical result related to that of

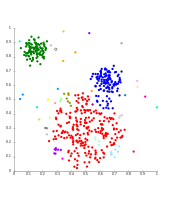

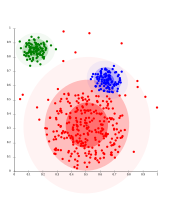

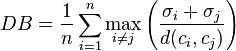

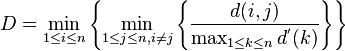

is the

is the  ,

,  is the average distance of all elements in cluster

is the average distance of all elements in cluster  is the distance between centroids

is the distance between centroids  and

and  . Since algorithms that produce clusters with low intra-cluster distances (high intra-cluster similarity) and high inter-cluster distances (low inter-cluster similarity) will have a low Davies–Bouldin index, the clustering algorithm that produces a collection of clusters with the smallest

. Since algorithms that produce clusters with low intra-cluster distances (high intra-cluster similarity) and high inter-cluster distances (low inter-cluster similarity) will have a low Davies–Bouldin index, the clustering algorithm that produces a collection of clusters with the smallest

represents the distance between clusters

represents the distance between clusters  and

and  , and

, and  measures the intra-cluster distance of cluster

measures the intra-cluster distance of cluster

is the number of

is the number of  is the number of

is the number of  is the number of

is the number of  is the number of

is the number of  . Let

. Let

is the

is the  is the

is the

,

,  . In other words,

. In other words,  allocates an increasing amount of weight to recall in the final F-measure.

allocates an increasing amount of weight to recall in the final F-measure.

index is the geometric mean of the

index is the geometric mean of the  and

and  .

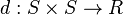

. of

of  points along with an integer

points along with an integer  , and pairwise distances among the points in

, and pairwise distances among the points in  . We can then define a distance function to be any function

. We can then define a distance function to be any function  such that for distinct

such that for distinct  , we have

, we have  if and only if

if and only if  , and

, and  - in other words

- in other words  must be symmetric and two points have distance zero if only if they are the same point.

must be symmetric and two points have distance zero if only if they are the same point. that takes a distance function

that takes a distance function  and an integer

and an integer  and returns a

and returns a  will be called its "clusters". Two clustering functions are equivalent if and only if they output the same partitioning on all values of

will be called its "clusters". Two clustering functions are equivalent if and only if they output the same partitioning on all values of  to be the same function with all distances multiplied by

to be the same function with all distances multiplied by  .

. , we have

, we have

) be the set of all possible outputs while varying

) be the set of all possible outputs while varying  , there should exist a

, there should exist a  . By varying distances amongst points, we should be able to obtain all possible

. By varying distances amongst points, we should be able to obtain all possible  is a

is a  ; and (b) for all

; and (b) for all  . In other words,

. In other words,

.

.

.

. .

.

bounds: An upper bound is kept on the distribution of a statistic calculated when the null hypothesis is true. Nodes are pruned when it is no longer possible to meet this cutoff.

bounds: An upper bound is kept on the distribution of a statistic calculated when the null hypothesis is true. Nodes are pruned when it is no longer possible to meet this cutoff.

in the same space. Each datapoint

in the same space. Each datapoint  has a host node, namely the closest node

has a host node, namely the closest node  .

. ,

, .

. , are connected by elastic edges. Call this set of pairs

, are connected by elastic edges. Call this set of pairs  . Some triplets of nodes,

. Some triplets of nodes,  , form bending ribs. Call this set of triplets

, form bending ribs. Call this set of triplets  .

. ,

, ,

, and

and  are the stretching and bending moduli respectively. The stretching energy is sometimes referred to as the "membrane" term, while the bending energy is referred to as the "thin plate" term.

are the stretching and bending moduli respectively. The stretching energy is sometimes referred to as the "membrane" term, while the bending energy is referred to as the "thin plate" term.

is determined by the mechanical equilibrium of the elastic map, i.e. its location is such that it minimizes the total energy

is determined by the mechanical equilibrium of the elastic map, i.e. its location is such that it minimizes the total energy  .

. minimization of the quadratic functional

minimization of the quadratic functional  ;

;

,

,

be the distance of the object

be the distance of the object  to the k nearest neighbor. Note that the set of the k nearest neighbors includes all objects at this distance, which can in the case of a "tie" be more than k objects. We denote the set of k nearest neighbors as

to the k nearest neighbor. Note that the set of the k nearest neighbors includes all objects at this distance, which can in the case of a "tie" be more than k objects. We denote the set of k nearest neighbors as  .

.

is the true distance of the two objects, but at least the

is the true distance of the two objects, but at least the  of

of

indicates that the object is comparable to its neighbors (and thus not an outlier). A value below

indicates that the object is comparable to its neighbors (and thus not an outlier). A value below

![[0:1]](http://upload.wikimedia.org/math/6/c/6/6c6526273c612767dbca7ac35fc98daa.png) .

. where r is sparse. Generally speaking, n is assumed to be larger than d to allow the freedom for a sparse representation.

where r is sparse. Generally speaking, n is assumed to be larger than d to allow the freedom for a sparse representation. i)

i)

be a sequence of states

be a sequence of states  belonging to a finite set of possible states. Let us denote

belonging to a finite set of possible states. Let us denote  the sequence space, i.e. the set of all possible sequences of states.

the sequence space, i.e. the set of all possible sequences of states. . In the most simple approach, a set composed of only three basic operations to transform sequences is used:

. In the most simple approach, a set composed of only three basic operations to transform sequences is used: is inserted in the sequence

is inserted in the sequence

and

and is replaced (substituted) by state

is replaced (substituted) by state  ,

,  .

. is associated to each operator. Given two sequences

is associated to each operator. Given two sequences  and

and  , the idea is to measure the cost of obtaining

, the idea is to measure the cost of obtaining  be a sequence of operators such that the application of all the operators of this sequence

be a sequence of operators such that the application of all the operators of this sequence  where

where  denotes the compound operator. To this set we associate the cost

denotes the compound operator. To this set we associate the cost  , that represents the total cost of the transformation. One should consider at this point that there might exist different such sequences

, that represents the total cost of the transformation. One should consider at this point that there might exist different such sequences

is by definition nonnegative since it is the sum of positive costs, and trivially

is by definition nonnegative since it is the sum of positive costs, and trivially  if and only if

if and only if  , that is there is no cost. The distance function is

, that is there is no cost. The distance function is  ; the term indel cost usually refers to the common cost of insertion and deletion.

; the term indel cost usually refers to the common cost of insertion and deletion. .

.

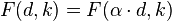

) by the formula

) by the formula  , where:

, where:

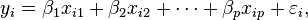

is a

is a  , and two parameters,

, and two parameters,  and

and  :

:

is an error term and the subscript

is an error term and the subscript

, is the difference between the value of the dependent variable predicted by the model,

, is the difference between the value of the dependent variable predicted by the model,  , and the true value of the dependent variable,

, and the true value of the dependent variable,

.

.

is the

is the  is the mean of the

is the mean of the  values.

values.

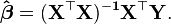

is

is  . Thus X is n×p, Y is n×1, and

. Thus X is n×p, Y is n×1, and

, where

, where  is the sample size,

is the sample size,  is the number of observations needed to reach the desired precision if the model had only one independent variable.

is the number of observations needed to reach the desired precision if the model had only one independent variable. .

.

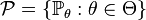

where

where  , where

, where  is a parameter and

is a parameter and  is the feasible region of parameters, which is a subset of d-dimensional

is the feasible region of parameters, which is a subset of d-dimensional  .

. . A

. A  as

as  and

and  as

as

selected to suit the problem.

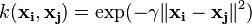

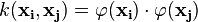

selected to suit the problem. of images of feature vectors that occur in the data base. With this choice of a hyperplane, the points

of images of feature vectors that occur in the data base. With this choice of a hyperplane, the points  Note that if

Note that if

, a set of n points of the form

, a set of n points of the form

belongs. Each

belongs. Each  from those having

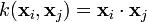

from those having  . Any hyperplane can be written as the set of points

. Any hyperplane can be written as the set of points  satisfying

satisfying

denotes the

denotes the  the

the  determines the offset of the hyperplane from the origin along the normal vector

determines the offset of the hyperplane from the origin along the normal vector

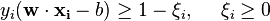

, so we want to minimize

, so we want to minimize  . As we also have to prevent data points from falling into the margin, we add the following constraint: for each

. As we also have to prevent data points from falling into the margin, we add the following constraint: for each  of the first class

of the first class of the second.

of the second.

)

) )

)

(the factor of 1/2 being used for mathematical convenience) without changing the solution (the minimum of the original and the modified equation have the same w and b). This is a

(the factor of 1/2 being used for mathematical convenience) without changing the solution (the minimum of the original and the modified equation have the same w and b). This is a

, the previous constrained problem can be expressed as

, the previous constrained problem can be expressed as![\min_{\mathbf{w},b } \max_{\boldsymbol{\alpha}\geq 0 } \left\{ \frac{1}{2}\|\mathbf{w}\|^2 - \sum_{i=1}^{n}{\alpha_i[y_i(\mathbf{w}\cdot \mathbf{x_i} - b)-1]} \right\}](http://upload.wikimedia.org/math/c/9/1/c91514d03dd2ea611781b38ec03fea25.png)

do not matter since we must set the corresponding

do not matter since we must set the corresponding

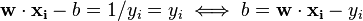

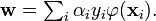

are exactly the support vectors, which lie on the margin and satisfy

are exactly the support vectors, which lie on the margin and satisfy  . From this one can derive that the support vectors also satisfy

. From this one can derive that the support vectors also satisfy

. In practice, it is more robust to average over all

. In practice, it is more robust to average over all  support vectors:

support vectors:

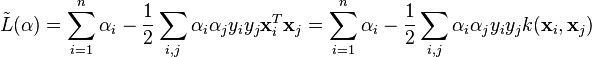

and substituting

and substituting  , one can show that the dual of the SVM reduces to the following optimization problem:

, one can show that the dual of the SVM reduces to the following optimization problem:

.

. can be computed thanks to the

can be computed thanks to the

in the primal optimization problem. The corresponding dual is identical to the dual given above without the equality constraint

in the primal optimization problem. The corresponding dual is identical to the dual given above without the equality constraint

, which measure the degree of misclassification of the data

, which measure the degree of misclassification of the data

)

)

![\min_{\mathbf{w},\mathbf{\xi}, b } \max_{\boldsymbol{\alpha},\boldsymbol{\beta} } \left \{ \frac{1}{2}\|\mathbf{w}\|^2 +C \sum_{i=1}^n \xi_i - \sum_{i=1}^{n}{\alpha_i[y_i(\mathbf{w}\cdot \mathbf{x_i} - b) -1 + \xi_i]} - \sum_{i=1}^{n} \beta_i \xi_i \right \}](http://upload.wikimedia.org/math/2/7/d/27d4897c82071e84609de0de7a046566.png)

.

.

, for

, for  Sometimes parametrized using

Sometimes parametrized using

, for some (not every)

, for some (not every)  and

and

by the equation

by the equation  . The value w is also in the transformed space, with

. The value w is also in the transformed space, with  Dot products with w for classification can again be computed by the kernel trick, i.e.

Dot products with w for classification can again be computed by the kernel trick, i.e.  . However, there does not in general exist a value w' such that

. However, there does not in general exist a value w' such that

;

;  . Typically, each combination of parameter choices is checked using

. Typically, each combination of parameter choices is checked using

)

) )

)